Skills Observation Tracker

Digitizing Skills and Competency Verification for On-the-Job Learning

Project Overview

Company

Craft Education (a WGU Company) is a workforce education platform currently serving teaching, healthcare, automotive, and government apprenticeship programs.

My Role

Lead UX Designer, sole IC supporting 3 product teams. Led discovery, design, research, and cross-functional collaboration.

The Challenge

Learners in healthcare on-the-job learning programs and clinical rotations carry analog 3-ring binders filled with competency checklists that require instructor clearance and evaluator sign-offs before graduation. Instructors need a way to clear learners to practice skills on the job, which today happens verbally. Evaluators (called preceptors in healthcare) need to know which skills learners are cleared to practice and must assess learner competency during patient care. The catch: instructors have no way to check learner progress, evaluators are drowning in charting fatigue, hospital cell service and wifi are unreliable, and evaluators put their licenses on the line every time they verify a learner's competency. One mistake and the liability falls on them.

Understanding the Landscape

Through discovery research and interviews with evaluators, I uncovered critical pain points:

Instructors spend hours manually tracking learner clearances across spreadsheets and paper forms.

Evaluators are already experiencing severe charting fatigue. Any additional tool friction could cause adoption failure.

Hospital wifi and cell service are unreliable. Learners and evaluators might need to use shared hospital computers or complete tasks after leaving for the day.

No centralized reporting. Program directors had no visibility into which learners were at risk of not completing competencies before graduation.

Compliance requirements: healthcare programs must maintain 5-year records of skill observations (non-negotiable regulatory requirement).

The Journey

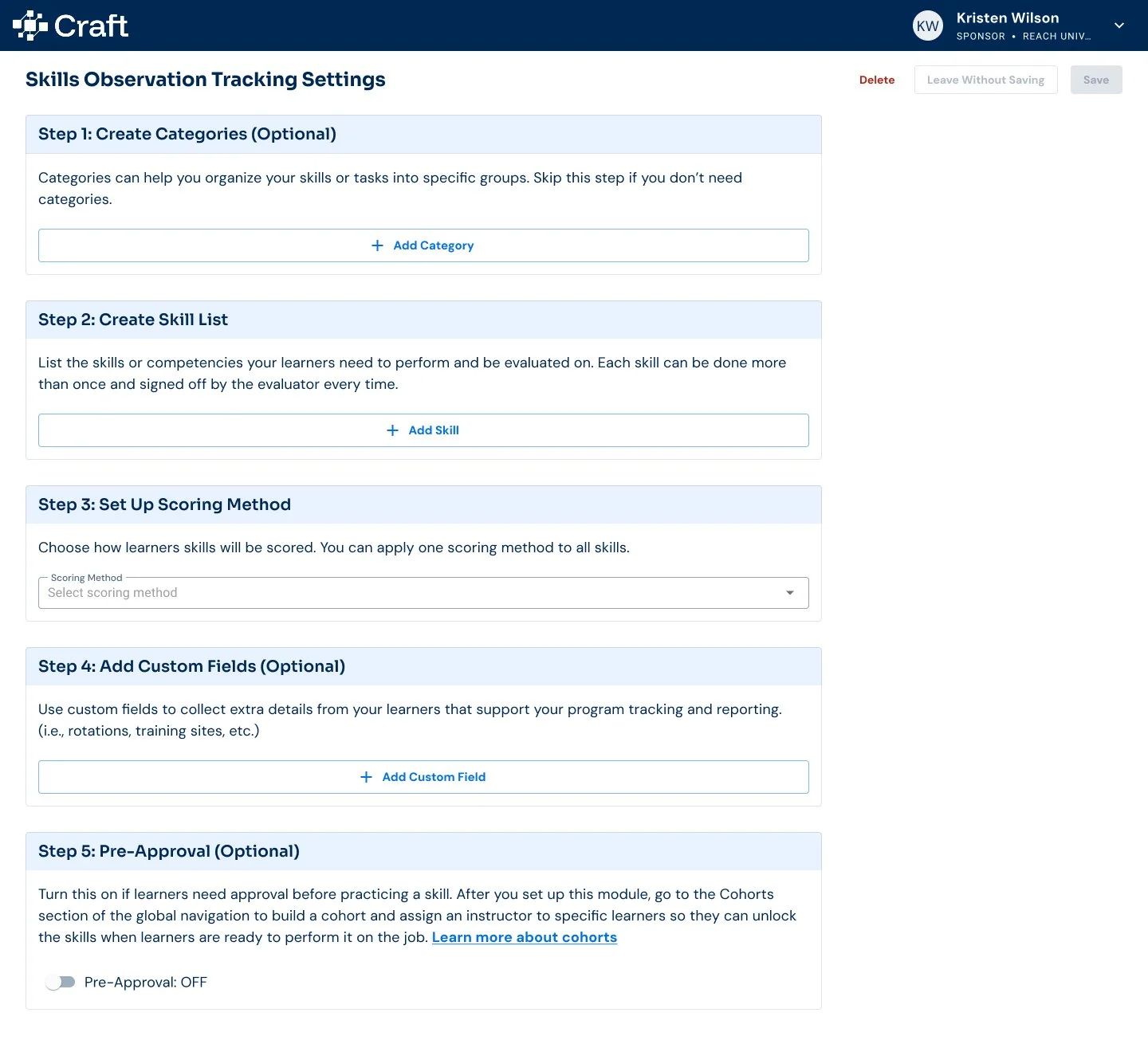

Started with a messy Miro board mapping the entire ecosystem: admin setup, instructor clearance, learner requests, and evaluator sign-offs. Complicated, but necessary to understand what we were building and for whom.

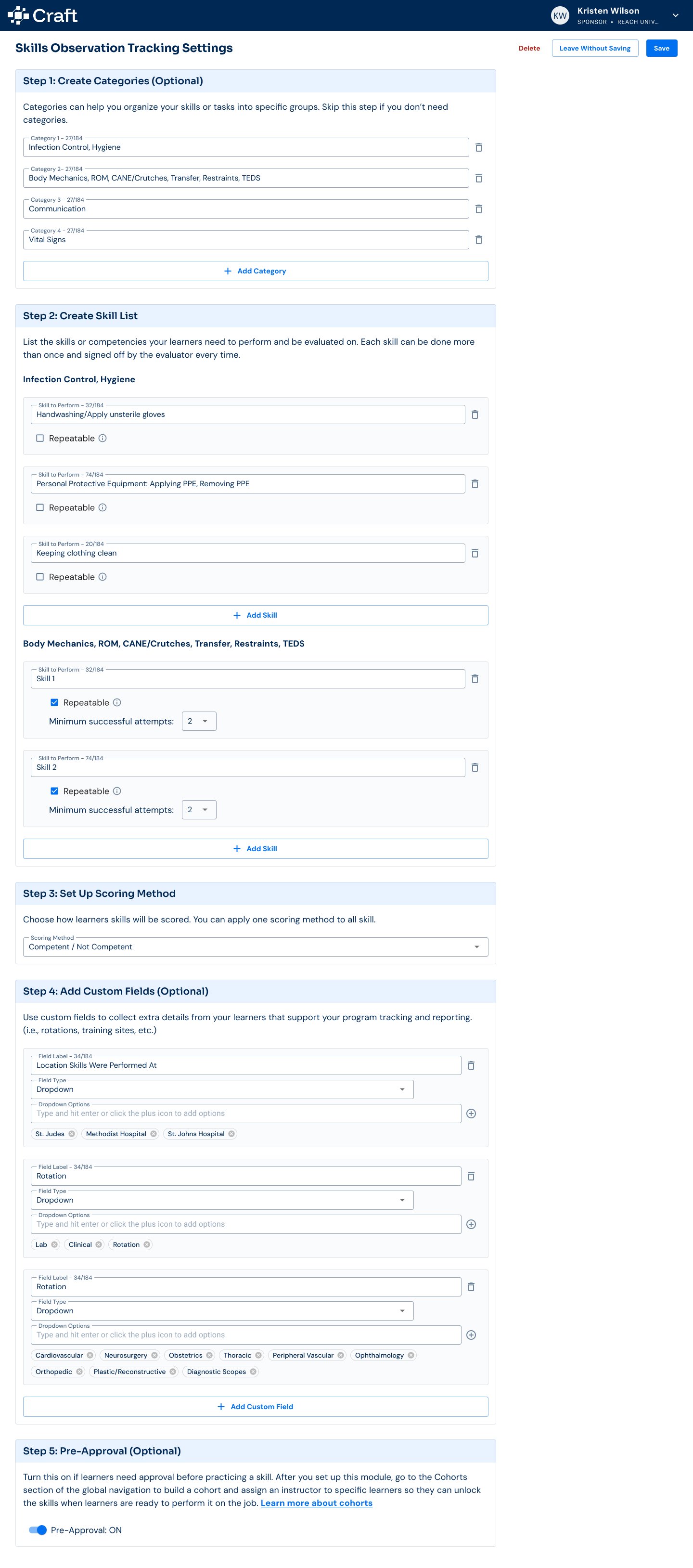

Collected every skills checklist from customers across our business verticals, added them to Dovetail, and surfaced patterns with Dovetail's AI insights tool.

Partnered with Claude to ideate and prototype based on validated use cases. The clickable prototype became our shared language with engineering and product.

Discovered through evaluator interviews that they had no time to hunt and peck through a tool when their main job was caring for patients. An idea to use a QR code for sign-off emerged from this friction, but was later shelved due to security concerns.

Realized LMS integration wouldn't work for our use case. We couldn't rely on external systems. Everything had to live in Craft.

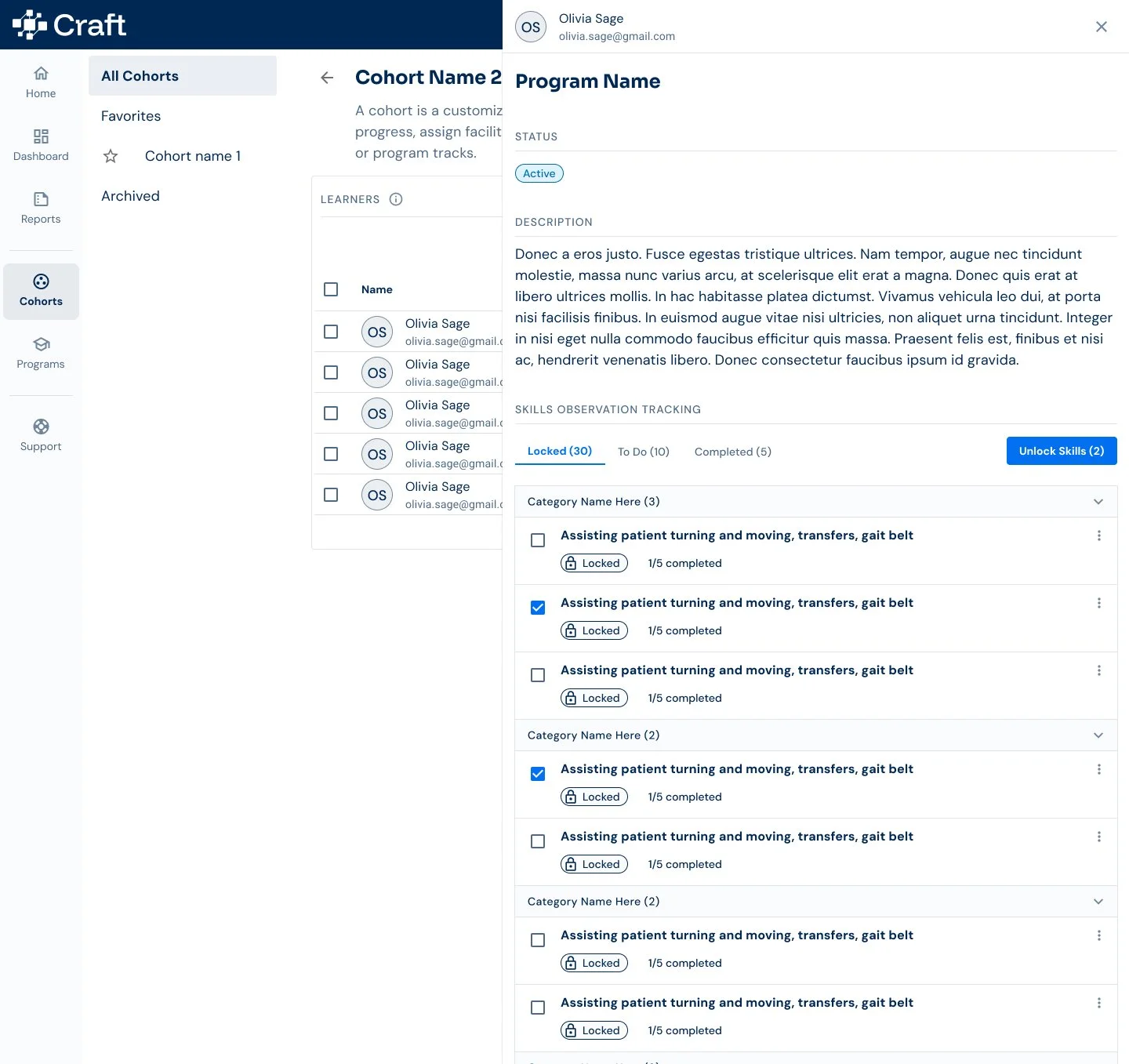

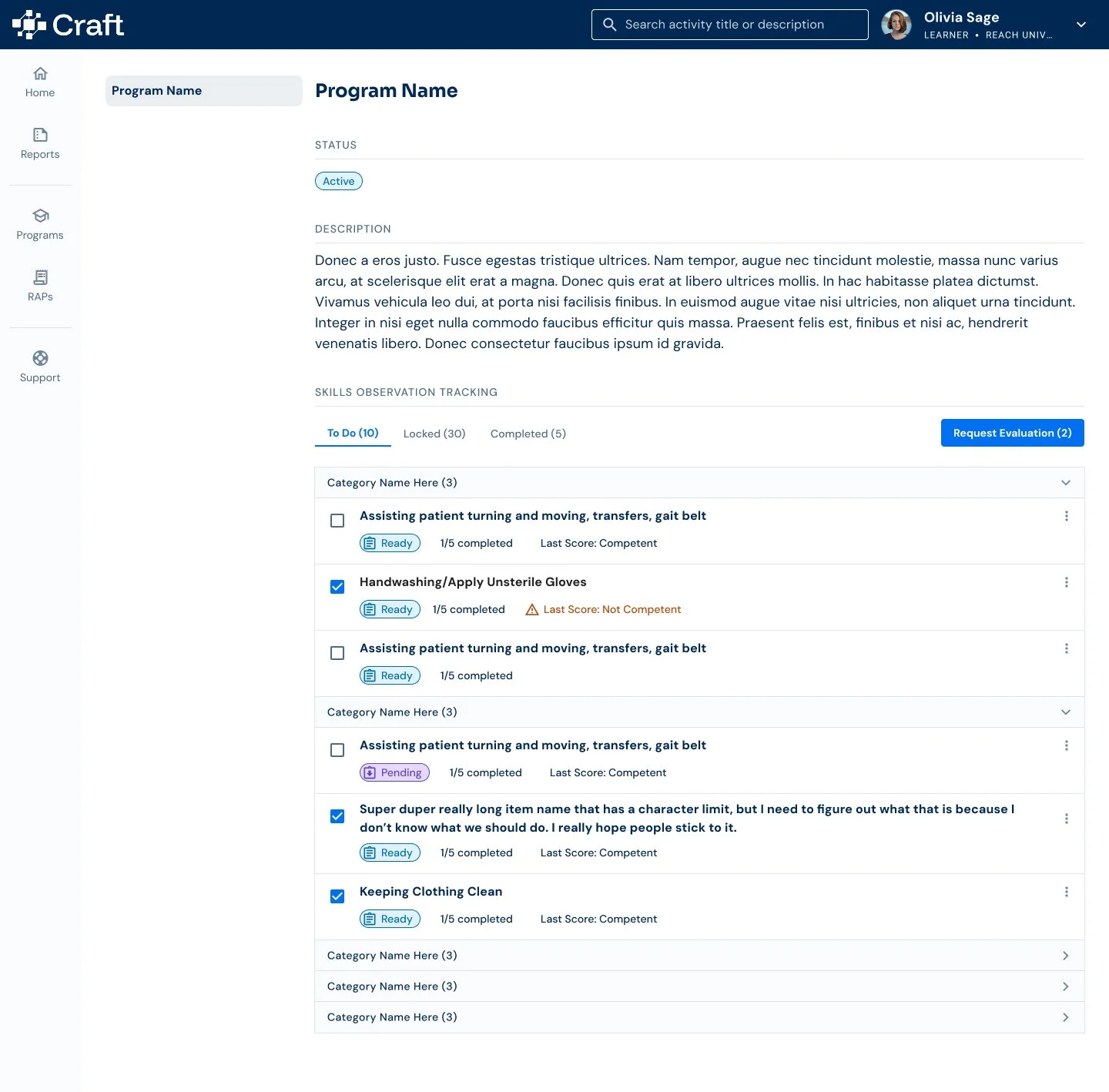

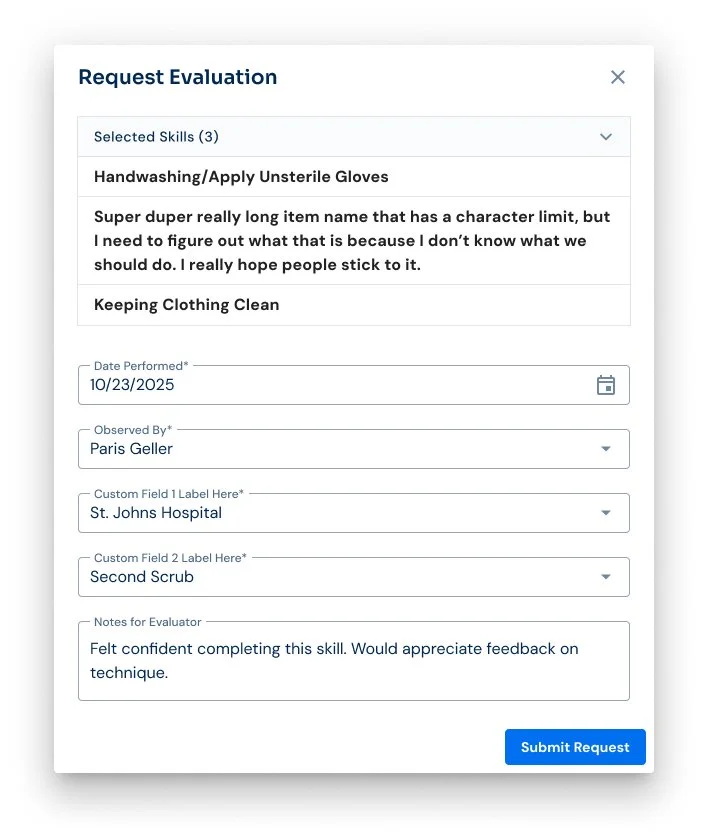

Made the strategic shift: put the work on learners. They log in, select skills, request evaluation from the specific preceptor they practiced with that day. Some shifts mean multiple preceptors and multiple requests.

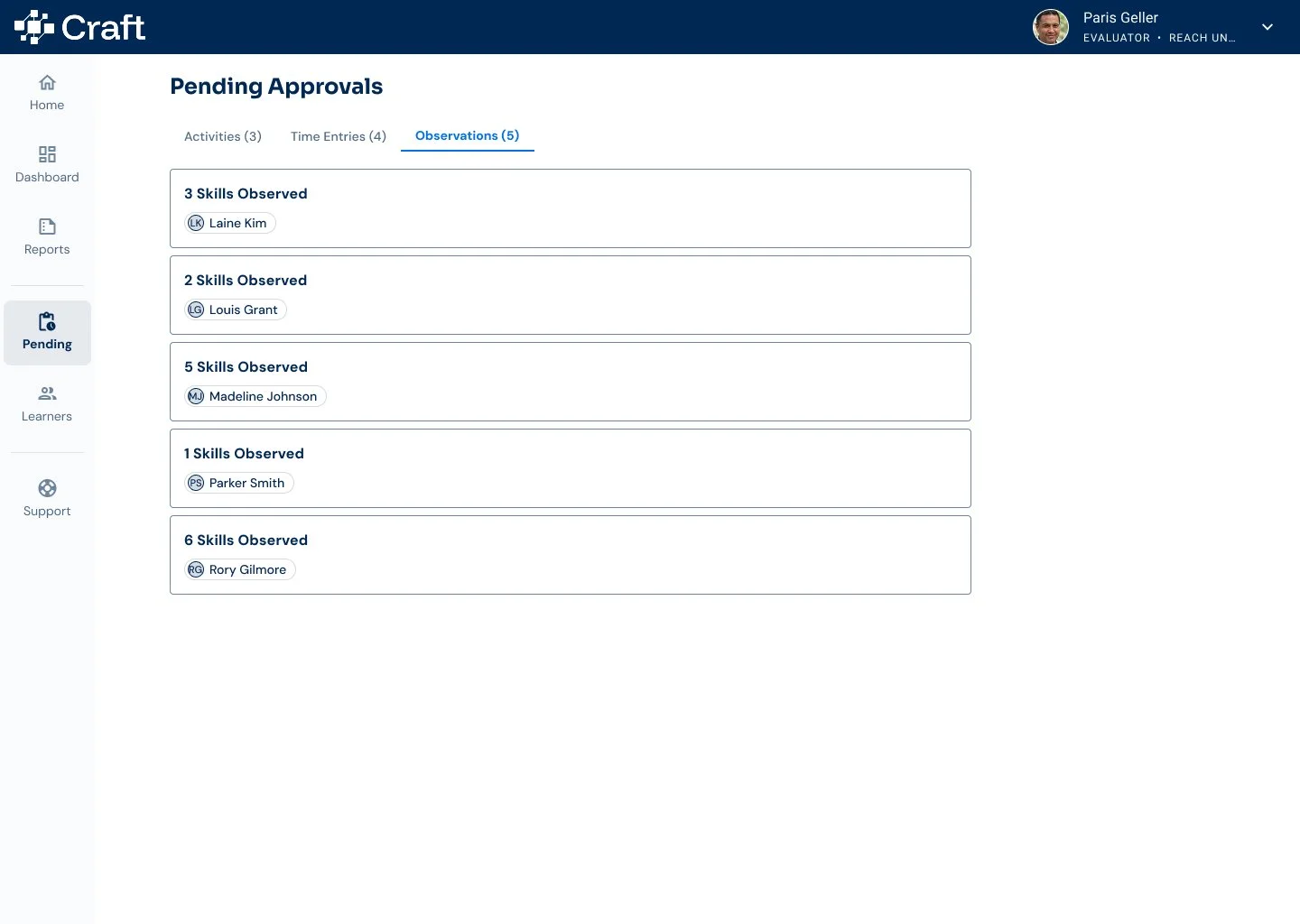

Learned that evaluators might need to score at the end of a shift on hospital computers, or hours later at home, because of wifi constraints. Designed for asynchronous, offline-friendly workflows.

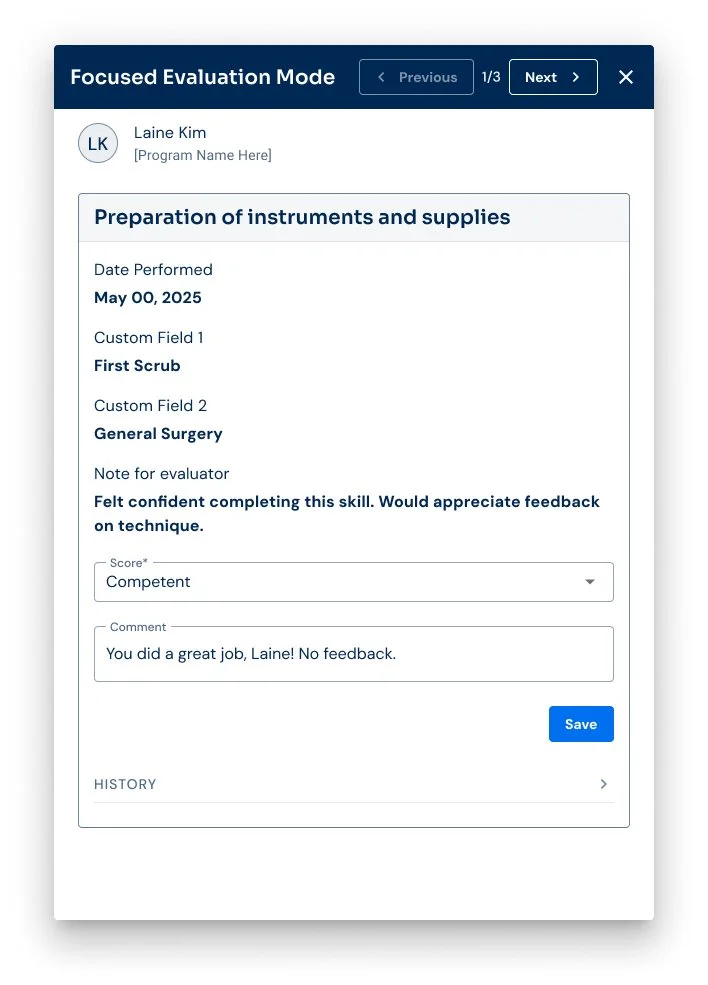

Designed every flow around one principle: minimal work for the person with the most liability. Get in, accomplish the task, get out. No extra clicks. No searching.

From Insight to Innovation

The original vision: LMS integration would push skill lists to learners, instructors would unlock skills from the LMS, and evaluators would receive alerts to score without logging in. Simple in theory. Then reality hit:

The LMS integration didn't work - Our current data pipeline couldn't handle the flow we needed. We had to build everything inside Craft instead.

Preceptors had no time for friction - They were drowning in charting fatigue. Their job was keeping patients alive, not hunting and pecking through a tool. QR codes sounded better than logins. But they weren't secure.

Their license was on the line - If the learner made a mistake during observation, the preceptor was responsible. That weight meant they needed a system they could trust, not one full of friction and confusion.

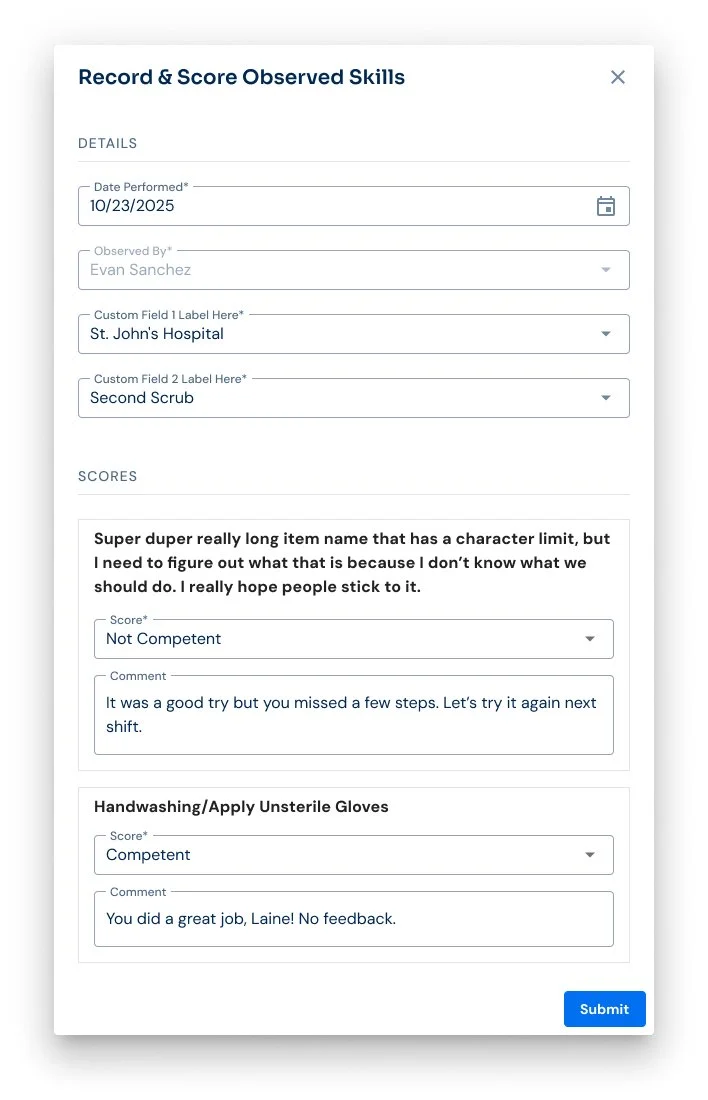

So we flipped the model. Learners became the drivers. They log in, select the skills they practiced that shift, and request evaluation from each preceptor they worked with. Instructors unlock skills. Evaluators score. Three roles, each with a minimal ask.

Every flow had one mission: get in, accomplish the task, get out. No extra clicks. No searching. No cognitive load on top of an already brutal day. Scoring might happen at the end of a shift on a shared computer or hours later at home. The simplicity of those flows is what I'm most proud of. Not despite the constraints, but because of them.

The Transformation

Through iterative design and continuous feedback from preceptors and instructors, I crafted a solution that:

Shifted responsibility to learners as the activation point, reducing the burden on the person with the most liability (the evaluator).

Simplified the sign-off and scoring process into the most frictionless experience possible, knowing evaluators often score after their shifts on unreliable hospital networks.

Enabled compliance-ready audit trails without adding friction to clinical observations.

Set the foundation for fast-follow enhancements based on real usage data from 200+ learners across QCC, WGU Health, UW Health, and Air Force training programs.

Outcomes & Future Impact

This project reinforced critical lessons:

Talk to the people with the most to lose - Preceptors putting their licenses on the line taught me more about UX constraints than any stakeholder meeting. Their fears and time pressures shaped every decision.

Flip the model when the original doesn't work - The LMS integration dream was elegant. It was also impossible. Shifting responsibility to learners required more complexity upfront but solved real problems.

Constraints breed clarity - Having to ship by January forced prioritization. The best features were often the ones I didn't build. I deferred at-risk learner flagging and admin and instructor analytics to fast-follow releases. Enough to validate, lean enough to ship.

Design for the actual environment, not the ideal one - Cell service and wifi in hospitals are unreliable. Evaluators work on shared computers. Scoring happens at midnight at home. Every design decision accounted for reality, not wishful thinking.

Simple flows matter more than simple features - I could have shipped a flashy dashboard or advanced reporting. Instead, I obsessed over making three basic workflows (learner request, evaluator score, instructor unlock) so painless that adoption would be automatic.

The Skills Observation Tracker launches in January 2026 to 200+ learners across nursing and government training verticals. It's the second validation of our modular architecture. The fast-follow roadmap includes at-risk learner alerts and admin and instructor analytics, shaped by real usage patterns.